Algorithms have invaded almost every corner of life: they mediate social interaction, facilitate governance, transact business, and more. While the domain of algorithmic control continues to grow, the way algorithms actually operate remains largely hidden from us. Society feels their tangible effects, including negative externalities such as discrimination by race and gender, the weakening of democracy, and the reproduction of inequality, but we do not fully know how these effects come about. The first step towards dismantling and fighting such harms is building comprehensive knowledge of how algorithms work, not only in their conventionally understood capacity as mathematical instruments but also as socio-technical systems with multifarious societal consequences.

If we hope to curb automated bias and the other negative externalities of algorithms, we must confront the present challenges of knowing them, recognizing algorithms as opaque, complex, and contingent systems that evade simple analysis.

Opacity

First, algorithms are difficult to know because they are purposefully opaque. Unless one is dealing with open-source software hosted on a repository like GitHub, it is nearly impossible to view the internal workings of an algorithm, because most algorithms are proprietary (Kitchin 2017). Within most online industries (search engines, social media, recommendation services, advertising, etc.), algorithms are the cornerstone of the business: a firm's value and competitive advantage depend on the efficacy and problem-solving ability of its algorithms (ibid). As countries around the world transition to full-fledged data-driven economies, more businesses have an incentive to guard their algorithms as trade secrets, releasing minimal details but never fully divulging the inner workings of their systems to the public.

Kitchin (2017) argues that such opacity creates substantial hurdles for researchers. He puts it succinctly: “the problem for experimentation is that we are not insiders” (ibid). While ‘insiders’ (e.g. engineers and executives) have a transparent, panoptic view of the algorithm, researchers and the public do not; we can see only the output, the consequences of the algorithm. To work around this, researchers often study algorithms by reverse engineering them, a difficult and time-consuming process in which one methodically varies the inputs to a system and observes how its outputs change in order to infer the relationships between them. Unfortunately, even tactics like reverse engineering are not sufficient on their own, given the systems’ complexity and contingency.
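To make the reverse-engineering strategy concrete, here is a minimal Python sketch of one common approach, one-at-a-time input perturbation: hold a baseline input fixed, nudge a single feature, and record how much the output moves. The `hidden_score` function and its feature names are hypothetical stand-ins for a proprietary system that can be queried but not inspected; real audits query live systems and must contend with noise, rate limits, and far more inputs than this toy assumes.

```python
from typing import Callable, Dict

Profile = Dict[str, float]


def hidden_score(profile: Profile) -> float:
    """Hypothetical black box: the researcher is assumed unable to read this body."""
    return 2.0 * profile["age"] - 5.0 * profile["postcode_score"] + 0.0 * profile["income"]


def probe_sensitivities(system: Callable[[Profile], float],
                        baseline: Profile,
                        delta: float = 1.0) -> Dict[str, float]:
    """Perturb one input at a time and measure the change in output per unit of input."""
    base_out = system(baseline)
    sensitivities: Dict[str, float] = {}
    for feature in baseline:
        perturbed = dict(baseline)   # copy so the baseline itself stays fixed
        perturbed[feature] += delta  # change exactly one input
        sensitivities[feature] = (system(perturbed) - base_out) / delta
    return sensitivities


if __name__ == "__main__":
    baseline = {"age": 40.0, "postcode_score": 0.5, "income": 30000.0}
    print(probe_sensitivities(hidden_score, baseline))
```

Because this toy model is linear and deterministic, each probe recovers its hidden weight exactly. The complexity and contingency described in the following sections are precisely what break this neat correspondence in real systems.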

Complexity

Algorithms can be incredibly complex, so complex that “Not Even the People Who Write Algorithms Really Know How They Work” (LaFrance 2015). In that article for The Atlantic, Andrew Moore, then the dean of computer science at Carnegie Mellon University and a former vice president at Google, questions “how much the content-providers understand how their own systems work” (ibid). Even though executives and engineers (‘insiders’) might have a clear, encompassing view of an algorithm’s code, they still might not understand the full functionality of their systems, including their diverse and unanticipated social repercussions.

Furthermore, what we often think of as a single algorithm is actually an algorithmic system: an assemblage of algorithms woven together with “potentially thousands of individuals, data sets, objects, apparatus, elements, protocols, standards, laws, etc. that frame their development” (Kitchin 2017). Algorithmic systems are complex because they are heterogeneous (they look and behave differently from one another) and because they are embedded within other algorithms (ibid). Opening up an algorithmic system reveals a messy patchwork structure that calls upon “hundreds of other algorithms” (ibid). Decades ago, Foote and Yoder (1997) described this kind of sprawling software as a ‘Big Ball of Mud’, or ‘[a] haphazardly structured, sprawling, sloppy, duct-tape and bailing wire, spaghetti code jungle’.

Seaver (2014) extends this picture of tangled, improvised programming, asserting that “algorithmic systems are not standalone little boxes, but massive, networked ones with hundreds of hands reaching into them, tweaking and tuning, swapping out parts and experimenting with new arrangements.”

Contingency

In addition to being opaque and complex, algorithms challenge researchers because they are contingent: they depend on time and place and constantly adapt to the contextual inputs presented to them. Because many machine-learning systems incorporate and learn from new data continuously (and in turn change their internal logic as they run), one cannot assume that one interacts with the same algorithm twice (Seaver 2014). Through personalization, they also mutate, react, and tailor themselves to each user’s unique profile and behavior, making experiments based on a single user’s experience unreliable (ibid).

Seaver (2014) gives an example of how this plays out in practice: an unnamed online radio company does not deploy just one algorithm to curate song playlists; instead, it runs five master algorithms (each with its own internal logic) and assigns listeners to them based on their listening behavior. This means that listeners and researchers can never be sure which algorithm they have interacted with, or whether a given interaction can ever be repeated. Such uncertainty means that whatever knowledge is collected about the algorithm “cannot be simply extrapolated to all cases” (Kitchin 2017).
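Seaver’s example can be illustrated with a toy model. The Python sketch below assumes five hypothetical playlist algorithms and an invented routing rule that assigns each listener to one of them according to how often they skip songs; the catalog, song names, and skip-rate rule are all illustrative assumptions, not the company’s actual system. It shows why an observation from one listener’s session need not generalize: the same request yields different playlists for different listeners, and even for the same listener after a single extra skip.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical catalog: song -> (popularity, tempo_bpm, release_year).
CATALOG: Dict[str, Tuple[int, int, int]] = {
    "song_a": (90, 120, 2015),
    "song_b": (40, 150, 1998),
    "song_c": (70, 140, 2021),
    "song_d": (20, 80, 1975),
}

Playlist = List[str]

# Five hypothetical "master" algorithms, each ordering the same catalog by a different logic.
MASTER_ALGORITHMS: List[Callable[[], Playlist]] = [
    lambda: sorted(CATALOG, key=lambda s: -CATALOG[s][0]),  # most popular first
    lambda: sorted(CATALOG, key=lambda s: CATALOG[s][0]),   # least popular first
    lambda: sorted(CATALOG, key=lambda s: -CATALOG[s][1]),  # fastest tempo first
    lambda: sorted(CATALOG, key=lambda s: -CATALOG[s][2]),  # newest first
    lambda: sorted(CATALOG, key=lambda s: CATALOG[s][2]),   # oldest first
]


def assign_algorithm(history: List[str]) -> int:
    """Invented routing rule: bucket a listener's skip rate into one of five bins."""
    if not history:
        return 0
    n = len(MASTER_ALGORITHMS)
    skips = history.count("skip")
    # Integer arithmetic avoids floating-point surprises at bin boundaries.
    return min(skips * n // len(history), n - 1)


def recommend(history: List[str]) -> Playlist:
    """Route the listener to one master algorithm and return its playlist."""
    return MASTER_ALGORITHMS[assign_algorithm(history)]()


if __name__ == "__main__":
    listener_1 = ["play", "play", "play", "play"]
    listener_2 = ["skip", "play", "skip", "play"]
    print(recommend(listener_1))             # listener 1's playlist
    print(recommend(listener_2))             # same request, routed to a different algorithm
    print(recommend(listener_1 + ["skip"]))  # listener 1 again, after one more skip
```

The routing rule is deliberately simple. The point is only that an observer who studied listener 1’s first playlist would be drawing conclusions about a system that neither listener 2, nor listener 1 one skip later, actually experiences.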

Conclusion

Algorithms are not inherently impossible to understand, and pretending that they are shields their developers from full responsibility for building such powerful machines (Kroll 2018).

Recognizing algorithms as opaque, complex, and contingent systems that often defy traditional research methods does not absolve us, as internet users, researchers, and citizens of the 21st century, from our responsibility to investigate them and constrain their ill effects. Instead, it should invigorate us to press further.

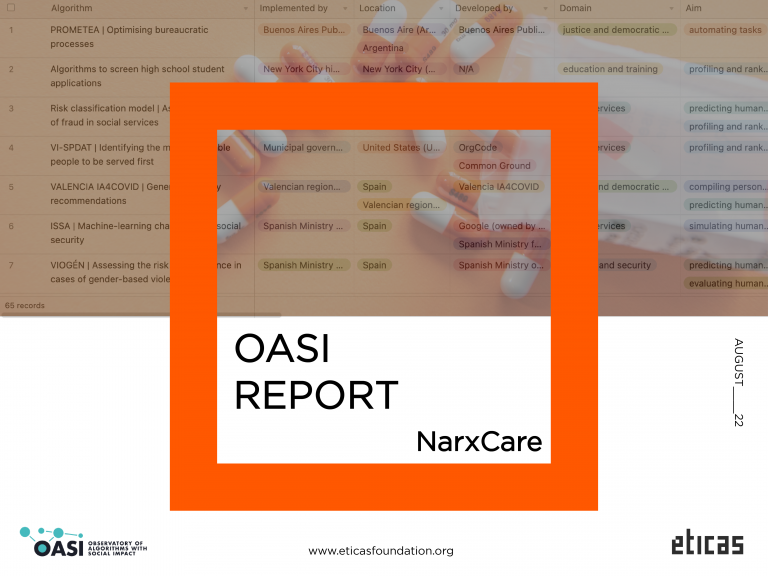

As automated decision-making tools continue to shape our lives, Eticas Foundation is committed to confronting the challenges of understanding algorithms. Through OASI (Observatory of Algorithms with Social Impact), we hope to advance public understanding of algorithms by building an accessible, searchable database that gathers and sorts real stories of algorithms and their human impacts from around the world. Explore OASI to learn more.

Bibliography:

Foote, B., & Yoder, J. (1997). Big Ball of Mud. Pattern Languages of Program Design, 4, 654–692.

Kitchin, R. (2017). Thinking critically about and researching algorithms. Information, Communication & Society, 20(1), 14–29. http://mural.maynoothuniversity.ie/11591/1/Kitchin_Thinking_2017.pdf

Kroll, J. (2018). The fallacy of inscrutability. Philosophical Transactions of the Royal Society A, 376, 20180084. https://royalsocietypublishing.org/doi/10.1098/rsta.2018.0084

LaFrance, A. (2015). Not Even the People Who Write Algorithms Really Know How They Work. The Atlantic. https://www.theatlantic.com/technology/archive/2015/09/not-even-the-people-who-write-algorithms-really-know-how-they-work/406099/

Seaver, N. (2014). Knowing algorithms. Media in Transition 8, MIT.