What happens when data are inaccurate, incomplete or outdated?

In other words, what happens when Big Data becomes Bad Data?

#BadData wants to collect personal stories and reports about the social, ethical or economic consequences of bad and misused data.

Send your Bad Data experience.

Thanks to artificial intelligence, algorithms can be trained on and learn from data. But what an algorithm does depends heavily on how good the data is. Data can be corrupted, out of date, useless or illegal. In this way, bad data plays an important part in all kinds of decision-making processes and outcomes.

#BadData

From banking to health to social services or education, bad data can have an important impact on our most fundamental rights. At the bottom of this page you’ll find a reading list filled with concrete examples of how bad data is already impacting society.

In 2000, a problem with Washington, DC’s drinking water began when officials switched the disinfectant they used to purify the water. The switch was supposed to make the water cleaner. But the change also increased corrosion from the city’s lead pipes, upping the amount of lead in the water.

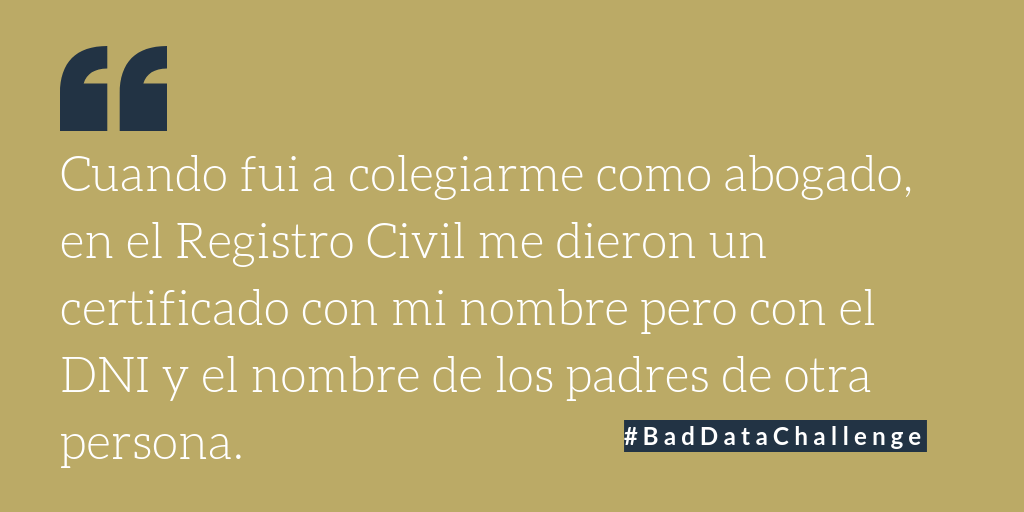

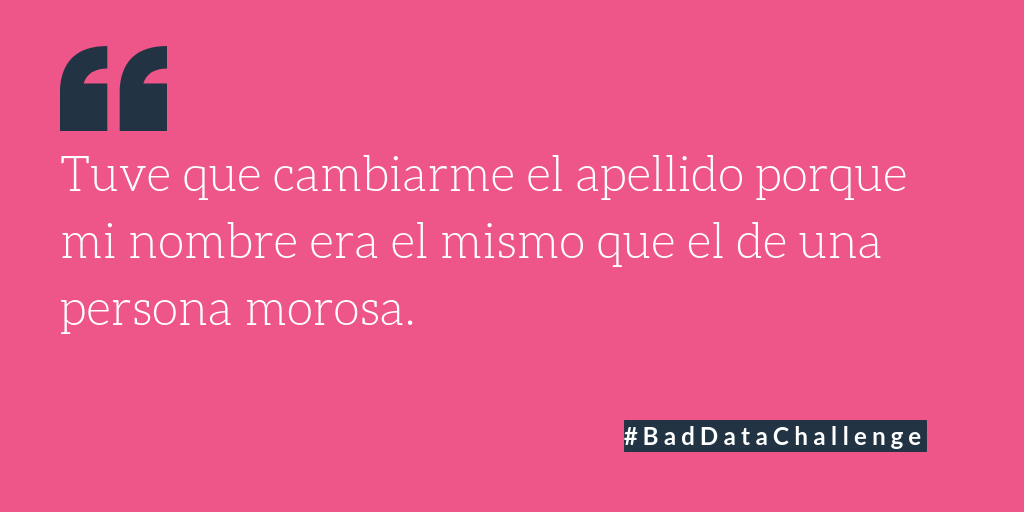

In 2018 Eticas started a #BadDataChallenge, and the response was overwhelming: simply by asking themselves the question, people realized that they had run into bad data at least once in their lives. Some had ended up paying for another person's health insurance; others had their parents' names recorded incorrectly on their ID, which repeatedly complicated their identification.

Unless otherwise noted content on this site is licensed under a Creative Commons Attribution 4.0 International License

Facing competition from the sweeter-tasting Pepsi Cola in the mid-1980s, Coca-Cola tested a new formula on 200,000 subjects. It beat Pepsi and the classic formula time after time in a series of taste tests. Yet the market research focused entirely on taste, ignoring several other factors that motivate people to purchase Coca-Cola. Because marketers didn’t consider the classic formula’s relation to the larger brand, the company lost tens of millions of dollars and had to pull New Coke from the shelves.

Source:

https://www.utopiainc.com/resources/blog/how-bad-data-changed-the-course-of-history

Similar to the case of disability payments in the UK, in the United States there have been a number of cases where radical readjustments were made to home care received by people with a broad range of illnesses and disabilities, after algorithmic assessment was introduced.

While most reporting on this has focused on the algorithms and their code, important problems with the assessments themselves were also found. Kevin De Liban, an attorney for Legal Aid of Arkansas, started keeping a list of these. One variable in the assessment was foot problems. When an assessor visited a certain person, they wrote that the person didn’t have any problems, because they were an amputee. Over time, De Liban says, they discovered wildly different scores when the same people were assessed, despite being in the same condition.

Source:

https://www.theverge.com/2018/3/21/17144260/healthcare-medicaid-algorithm-arkansas-cerebral-palsy

Starting in 2016, the number of appeals against decisions made by the Department for Work and Pensions (DWP) on the basis of assessments made by the private, profit-driven contractors working on its behalf began to increase dramatically. There were 60,600 Social Security & Child Support appeals between October and December 2016, a 47% increase. Roughly 85% of those appeals were accounted for by the Personal Independence Payment (PIP) and the Employment & Support Allowance (ESA).

It was not just the number of appeals that increased rapidly, either. The rate at which decisions made by the DWP were overturned also rose substantially, to almost two-thirds of all appeals. Clearly, there was a problem with the assessment process. On the one hand, the weighting of different criteria for eligibility in the Personal Independence Payment program was changed. On the other hand, the people hired by private firms to carry out PIP assessments apparently altered data, with clearly discriminatory effects. As a result, the DWP spent millions on appeals, and a total of 1.6 million disability benefit claims will be reviewed.

Sources:

http://www.bbc.com/news/health-41581060

http://www.bbc.com/news/uk-politics-35861447

Cambridge Analytica, a private company, was able to harvest 50 million Facebook profiles and use them to build a powerful software program to predict and influence election choices. The data was collected through an application: users were paid to take a personality test and agreed to have their data collected for academic use. However, this data, and that of their friends, was then used to build the software, thus violating Facebook’s “platform policy”, which allowed the collection of data to improve the user experience in the app but barred it from being sold on or used for advertising. Even though the responsibilities of each side are not yet totally clear, this case shows the illicit use of personal data as a consequence of poor and unlawful practices and policies in the collection and elimination of data.

Deloitte Analytics conducted a survey testing how accurate commercial data used for marketing, research and product management is likely to be. They found that:

Source:

https://www2.deloitte.com/insights/us/en/deloitte-review/issue-21/analytics-bad-data-quality.html

Police are increasingly using predictive software. This is particularly challenging because it is actually quite difficult to identify bias in criminal justice prediction models. This is partly because police data aren’t collected uniformly, and partly because the data police track reflect longstanding institutional biases along income, race, and gender lines.

While police data are often described as representing “crime,” that’s not quite accurate. Crime itself is a largely hidden social phenomenon that happens anywhere a person violates a law. What are called “crime data” usually tabulate specific events that aren’t necessarily lawbreaking—like a 911 call—or that are influenced by existing police priorities, like arrests of people suspected of particular types of crime, or reports of incidents seen when patrolling a particular neighborhood.

Neighborhoods with lots of police calls aren’t necessarily the same places the most crime is happening. They are, rather, where the most police attention is. And where that attention focuses can often be biased by gender and racial factors.

A recent study by the Human Rights Data Analysis Group found that predictive policing vendor PredPol’s purportedly race-neutral algorithm targeted black neighborhoods at roughly twice the rate of white neighborhoods when trained on historical drug crime data from Oakland, California. Similar results were found when analyzing the data by income group, with low-income communities targeted at disproportionately higher rates compared to high-income neighborhoods. This was despite the fact that estimates from public health surveys and population models suggest that illicit drug use in Oakland is roughly equal across racial and income groups. If the algorithm were truly race-neutral, it would spread drug-fighting police attention evenly across the city.

Similar evidence of racial bias was found by ProPublica’s investigative reporters when they looked at COMPAS, an algorithm predicting a person’s risk of committing a crime, used in bail and sentencing decisions in Broward County, Florida, and elsewhere around the country. These systems learn only what they are presented with; if those data are biased, their learning can’t help but be biased too.
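The mechanism described above can be illustrated with a toy simulation. This is only a sketch under stated assumptions, not PredPol's or COMPAS's actual method: the function name, the two neighborhoods and every number are hypothetical. Both neighborhoods are given the same underlying offence rate, but one starts with twice as many historical records. Patrols are allocated in proportion to past records, and new incidents are only recorded where patrols are present.

```python
def simulate_patrol_feedback(initial_records, true_rate, rounds=10, patrols=100.0):
    """Toy model: patrols follow past records, and records follow patrols.

    All inputs are hypothetical illustration values, not real police data.
    Returns the share of patrols sent to each neighborhood in every round.
    """
    records = list(initial_records)
    history = []
    for _ in range(rounds):
        total = sum(records)
        # Patrol attention is split in proportion to recorded incidents so far.
        shares = [r / total for r in records]
        history.append(shares)
        # New records depend on patrol presence as well as on underlying crime.
        new_records = [patrols * s * rate for s, rate in zip(shares, true_rate)]
        records = [r + n for r, n in zip(records, new_records)]
    return history


# Two neighborhoods with the SAME assumed offence rate but unequal past records.
history = simulate_patrol_feedback(initial_records=[20.0, 10.0], true_rate=[0.1, 0.1])
first, last = history[0], history[-1]
print("patrol shares in first round:", first)
print("patrol shares in last round: ", last)
```

In this simplified model the patrol split never moves toward an even distribution, even though the assumed offence rates are identical: the data the system sees only ever confirm the initial imbalance.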

Source:

https://www.fastcompany.com/40419894/how-big-bad-data-could-make-policing-worse

Launched in 2008 in the hopes of using information about people’s online searches to spot disease outbreaks, Google Flu Trends would monitor users’ searches and identify locations where many people were researching various flu symptoms. In those places, the program would alert public health authorities that more people were about to come down with the flu.

But the project failed to account for the potential for periodic changes in Google’s own search algorithm. In an early 2012 update, Google modified its search tool to suggest a diagnosis when users searched for terms like “cough” or “fever.” On its own, this change increased the number of searches for flu-related terms. But Google Flu Trends interpreted the data as predicting a flu outbreak twice as big as federal public health officials expected, and far larger than what actually happened. This is a good case of bad data because it involves information biased by factors other than what was being measured.

Sources:

https://www.fastcompany.com/40419894/how-big-bad-data-could-make-policing-worse