We humans are incredibly good at facial recognition: normally, we can tell whether we know someone by looking at their face for less than half a second, and we can recognise a person's face even when we can't remember other details about them, such as their name or their job. Scientists believe this is because, as highly social animals, we evolved to keep track of many different individuals, so that we could quickly identify who is a friend and who is a potential rival or enemy.

As with many other things, machines and algorithmic systems can process images much faster than humans. Does that mean that algorithms are better than humans at identifying faces? The answer is complicated, because when it comes to facial recognition the issue is not so much speed as accuracy: the most advanced algorithms are as good as humans at identifying faces in clear pictures taken under laboratory conditions, but humans are better at identifying faces in real-life conditions and in poor-quality images. And, as is often the case, current evidence suggests that the best results come from humans and algorithms working together.

However, the most problematic aspect of automated facial recognition is that, when algorithms get it wrong, they tend to get it systematically wrong, following patterns that are worrying but, by now, familiar.

AI and algorithmic systems are trained on existing data: in the case of facial recognition, an automated system learns to identify new images of faces by going through huge numbers of existing pictures of faces that have already been identified. More often than not, those datasets are biased: they overrepresent white people, and white men in particular. As a result, facial recognition algorithms trained on such datasets become good at identifying the faces of white men and systematically worse at identifying the faces of everyone else.
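One way to make "systematically worse" concrete is to compute error rates separately for each group rather than as a single overall figure, which is broadly how bias in these systems tends to be measured. The Python sketch below is a hypothetical illustration only: it assumes each test result is recorded as a (group, predicted identity, true identity) tuple, and the group labels and records are invented toy data, not results from any real system. Real evaluations usually distinguish false matches from false non-matches; this sketch lumps both into a single error count.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Return the share of misidentified faces for each group.

    records: iterable of (group, predicted_id, true_id) tuples.
    This input format is a hypothetical choice made for illustration.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted_id, true_id in records:
        totals[group] += 1
        # Any mismatch between predicted and true identity counts as an error.
        if predicted_id != true_id:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Invented toy records: NOT measurements from any real system.
toy_records = [
    ("group A", "p1", "p1"),
    ("group A", "p2", "p2"),
    ("group A", "p3", "p3"),
    ("group A", "p4", "p5"),
    ("group B", "p6", "p7"),
    ("group B", "p8", "p8"),
    ("group B", "p9", "p10"),
    ("group B", "p11", "p11"),
]

# A single overall figure averages the groups together.
overall = sum(pred != true for _, pred, true in toy_records) / len(toy_records)
print(f"overall error rate: {overall:.2f}")

# Breaking it down by group reveals the gap the overall figure hides.
for group, rate in error_rates_by_group(toy_records).items():
    print(f"{group}: {rate:.2f}")
```

In this toy data, the overall error rate falls between the two groups, while faces in "group B" are misidentified twice as often as those in "group A": exactly the kind of gap a single headline accuracy figure can conceal.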

While automated facial recognition has quite a few potential uses (lawful and unlawful, ethical and unethical), so far these algorithms are used most prominently by police and other law enforcement agencies. That is the case with the five algorithmic systems currently listed in the OASI Register as having "identifying images of faces" as one of their aims.

The longest-standing of these algorithms in the Register is the facial identification system used since 2010 by the New York Police Department. Reportedly, the NYPD has made this algorithm a normal part of its operations and uses it in routine investigations as well as criminal ones.

Despite the need for transparency when dealing with algorithms known to discriminate against particular groups of people, in 2018 the media reported that the NYPD had spent two years refusing to give researchers at the Georgetown Law Center on Privacy and Technology information about how the police use this algorithm.

Another entry in the Register, the DataWorks Plus facial recognition software, provides a concrete example (perhaps the first of its kind) of what can happen when facial recognition technology fails in the hands of the police: a man was arrested for a crime he didn't commit.

In January 2020, Robert Williams was arrested and handcuffed by the police just outside his home in a Detroit suburb, in front of his wife and two daughters. Williams was taken to the police station and interrogated as a suspect in a robbery because the DataWorks Plus facial recognition algorithm used by the Detroit Police had incorrectly matched his face with video footage of the robber, while to the human eye it was clear that the robber and Williams were two different people. Williams, who is black, was held in custody for 30 hours before finally being released on bond.

But it's not only the police in the US who use facial recognition algorithms. The OASI Register also includes an entry about SARI, an automated image recognition system used by the Italian national police. While there's not much information about how exactly SARI might have been used, in April last year the Italian data protection authority issued an opinion warning about its potential consequences: "As well as lacking a legal basis to legitimise the automated processing of biometric data for facial recognition in security applications, the system as currently designed would enable mass/blanket surveillance."

One area where police and law enforcement agencies seem to be using facial recognition more and more is at the border. One such example in the OASI Register is Simplified Arrival, an algorithm used by the US Customs and Border Protection agency (CBP) since at least 2018.

CBP insists that the algorithm merely checks whether the picture taken of a person trying to enter the US matches the picture in their passport and in any other ID documents they might have submitted during the visa process. However, because of the discriminatory potential of facial recognition algorithms, even those who defend its use, such as the authors of a report by the Atlantic Council think tank, have called on the US administration to address "(the) fear of a surveillance state", "data protection standards" and "accuracy and bias issues".

While police and other security forces seem to be at the forefront of using facial recognition, such algorithms are also used by private companies. That's the case with Rekognition, a facial recognition software developed by Amazon, which, according to at least two different studies published in 2018 and 2019, erred systematically when trying to identify people of colour. According to a list maintained by Amazon, Rekognition is used by police forces and by very different kinds of private-sector companies, from media companies to marketing agencies and online marketplaces.

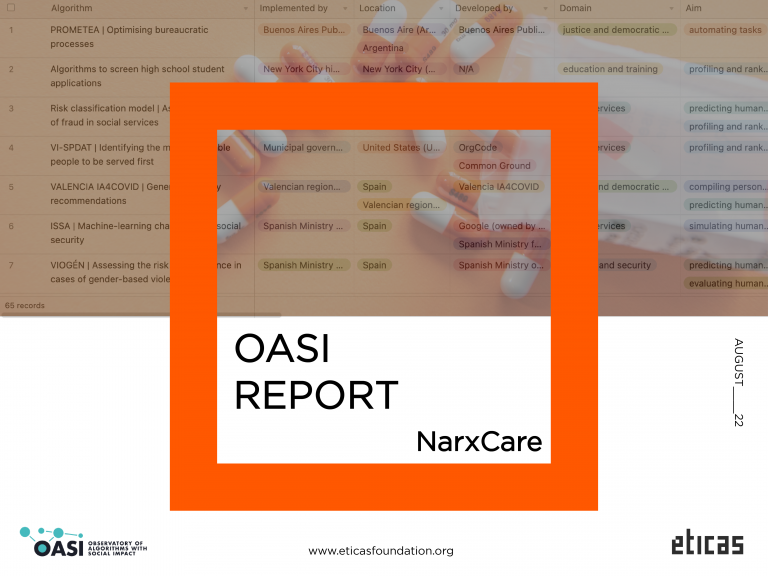

Because of their potential for negative social impact, algorithms should be explainable, so that all of us affected by their use can understand how they work and hold those responsible to account. Eticas Foundation's OASI project is an effort in that direction. On the OASI pages you can read more about algorithms and their social impact, and in the Register you can browse an ever-growing list of algorithms sorted into different categories. And if you know of an algorithmic system that's not in the Register and you think it should be added, please let us know.