Throughout history, and still today, there have been large groups of society that have been or continue to be discriminated against for reasons such as color, origin, beliefs, sexual orientation or gender. One of the most salient and established kind of discrimination is the one affecting half of the human population: women and girls. The world as we see it today, the way the history is told and the decisions that shaped it, are highly designed by men. Caroline Criado Perez, journalist and feminist activist, said: “The result of this deeply male-dominated culture is that the male experience, the male perspective, has come to be seen as universal, while the female experience -that of half the global population, after all- is seen as, well, niche.” And, as she also said: “When we exclude half of humanity from the production of knowledge we lose out on potentially transformative insights.”

Whether it’s about education, work, the arts, science or any other kind of social and economic activity, women have been continuously restricted from access and full participation. And when women and girls did succeed, their contributions were not registered on equal terms as those of men. As a result, the documentation, information and data our societies have been producing are biased against women, who are less present and unjustly treated when compared to men in all that information.

Interestingly enough, in the field of computer science, traditionally seen as completely male-dominated, one of the most prominent pioneers was a woman, Ada Lovelace, an English mathematician who wrote what is considered the first computer programme in history (she died at the young age of 36 in 1852).

Thanks to Lovelace’s and other people’s work (including more key contributions by other women), computer programming and digital technology evolved to the point where we are today, when most of us carry supercomputers in our pockets (i.e. our smartphones) that can instantly access a big part of all the information ever produced by humans (i.e. the internet).

And thanks to the advances and proliferation of computer and digital technology, these days we are also seeing the development and deployment of more and more algorithmic and so-called artificial intelligence (AI) systems, which are used to automatise activities and run complex tasks, including by processing huge amounts of data, that would take us humans a much longer time, or that we could simply not do ourselves.

The way many of these algorithms work is by being trained on existing data, and when we speak of machine learning that means that an algorithm learns to extract patterns and other significant correlations from the data it’s fed and trained on.

But what happens if such data are biased, for example against women, as we described above?

If a company or a state body use an algorithm to offer personalised recommendations to job applicants, and if that algorithm is trained on existing data about jobs and professions, then the algorithm may recommend jobs like banker, and engineer, and plane pilot to men, while recommending jobs like cleaner, care-giver, or sales assistant to women.

And that would be because existing data show that, traditionally, women have had such jobs, and the data and the algorithm don’t start to consider that such bias is the result of social inequality and structural discrimination against women. In effect, the algorithm would be reproducing and maybe even reinforcing existing biases against women, and that’s why we speak of gender discrimination as a potential social impact of algorithms.

To make things more problematic when it comes to AI, machine learning and algorithms, most people working in those fields still today, and generally in IT and technology, are men; and that’s even more pronounced regarding top and decision-taking roles. That means that most AI programmes and algorithmic systems have been developed by men or by mostly-male teams, and that may make it more difficult to compensate for existing biases that discriminate against women.

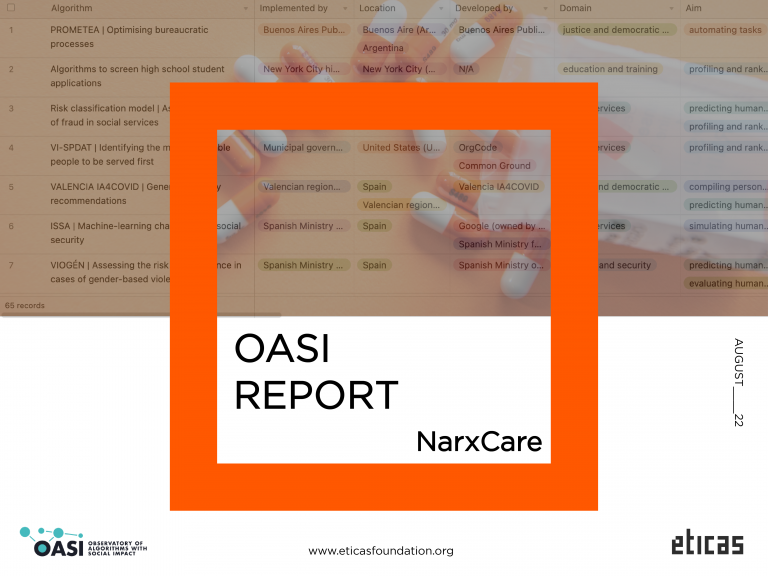

Los OASI Register can already offer an insightful overview of algorithmic systems that may result in gender discrimination. If you go to the Register and filter the “Social impact” column by the “gender discrimination” category, you get a list of quite a few entries, encompassing many of the different domains catalogued by the OASI Register and including algorithms used by some of the so-called tech giants: Google, Facebook, Amazon and Microsoft.

Google (which is owned by Alphabet) appears three times. There is the algorithm of Google AdSense, a system developed by Google for website publishers who want to advertise their services online in a targeted way (most of Google’s money comes from its advertising business). Already back in 2015, a study by academic researchers found that women were far less likely than men to be shown adverts for highly paid jobs through the Google AdSense system. The researchers concluded there was no way to know why that happened, because the AdSense algorithm was opaque (in 2013, AdSense was also found to reproduce racist biases [PDF]).

A similar trend was observed when researchers looked at the algorithm behind Google’s Image Search, which lists images found online based on the keywords entered into the search field by the user. As the OASI Register reports, in 2015 a team of academic researchers found that if you searched for “CEO” on Google Images, almost all the results were men (or, rather, white men). Likewise, if you searched for “doctor” on Google Images most of the images listed were of male doctors. However, if you searched for “nurse”, then almost all the results were of female nurses.

That was in 2015, and a casual test done while writing this article showed that the situation has barely improved: around 90% of the images shown after searching for “CEO” were of white men, and almost all the images found under “nurse” were of women. However, when searching for “doctor” the results did include a substantial number of female doctors, even though slightly more than half of the images were still of men. More in-depth research, published in February 2022, agrees that Google Image Search still has a gender bias problem (other image search engines also showed biased results, according to that research).

The third current Google example in the OASI Register is the Google Translate algorithm. In 2018, this software was also shown to reproduce discriminatory gender biases when translating non-gendered English words for jobs, like “doctor”, into gendered words in other languages, like Spanish, where it would systematically be translated as the male versions (“médico” or “doctor” and not “médica” or “doctora”). The same bias was present when translating English words like “nurse” and also “beautiful”, which would be translated into the female versions of the words in other languages. Today, this bias seems to have been corrected, and at least when translating from English to Spanish now Google Translate offers the two gendered versions of “doctor” and “nurse” as potential translations.

Still in the labour field, an internal algorithm developed by Amazon to streamline its own recruitment process was abandoned after the company discovered that the algorithm was systematically penalising women candidates and giving them a lower score simply because they were women. The reason was that the algorithm was trained on data from Amazon’s workforce and recruitment process, to which mostly men applied, and because of that the algorithmic system learnt that men candidates were preferable. After reportedly trying to fix it, Amazon discontinued the use of this algorithm in 2017.

A similar bias was observed in another internal algorithm used in recruitment, this time at Uber. In 2019, it was reported that the car-riding company had been using an algorithm to pre-select job candidates by looking at successful past applicants and other recruitment data. Given the fact that nearly 90% of Uber’s workforce was male, the algorithm started favouring male candidates too.

While Google, Facebook and Uber are well-known representatives of today’s tech companies, similar problems were already plaguing some of the earliest algorithms developed to try to make application processes more efficient. That was the case of the oldest of algorithms in the OASI Register, implemented in the 1970s at St. George’s Hospital Medical School in the UK. Back then, this school started using a computer programme to automate the applicant selection process. As it’s still today the case, the algorithm was based on the then-existing data from successful past applicants, most of whom were men. As a result, the algorithm systematically denied interviews to female applicants. The algorithm stopped being used reportedly in 1988.

Those examples show that training algorithms on historical data from the fields of labour and education (as well as many other fields) is problematic because of the implicit biases contained in such datasets. That’s why the OASI Register also flags “gender discrimination” as a potential impact of algorithms like Send@, which unlike the former cases is not from the private sector but has been developed and is being used by the Spanish Public Employment Service. While the stated aim of Send@ is to offer personalised advice to job-seekers, the fact that its algorithm is trained on data from past successful job candidates, and that we know that recruitment processes have traditionally and implicitly been discriminatory towards women, means that Send@ could inadvertently reproduce and maybe also reinforce such discriminatory biases.

Another revealing kind of bias was found in the search algorithm of Facebook (now owned by Meta). In 2019, research published in Wired magazine showed that the algorithm readily generated results when the search was “photos of my female friends” while no result was given when the search was of “photos of my male friends”. The reason was that Facebook assumed “male” was a typo for the word “female”. To make things more problematic, if you started typing “photos of my female friends” in the search box on Facebook, then the algorithm offered you to complete your search text by adding “in bikinis” or “at the beach”.

Facebook defended itself saying that the autocomplete function simply offers the terms most searched for by users. But that’s a troubling answer, because by trying to engineer such an automated function as neutral, Facebook was reproducing the sexist behaviour of many of its users, which in this way was being automated and reinforced and was being offered as the normal thing to do to users who had not shown a sexist bias.

The two last current examples in the OASI Register help show how widespread gender discrimination is in the algorithmic field. One of them involves another of the big tech companies, Microsoft, who in 2016 launched a Twitter account named Tay and managed by an algorithmic system. Tay was supposed to talk like a teenage girl and it was meant to learn as it interacted with people on social media.

At the beginning, things looked OK and Tay seemed to talk like a teenage girl. However, after just a few hours of use, Tay started posting messages that were explicitly sexist, as well as racist and anti-Semitic. Microsoft’s answer was along the lines of that of Facebook described above. Microsoft said that the algorithm responded that way because human Twitter users were talking to Tay in a sexist, racist and anti-Semitic way. Which again brings about the question of how to deal with algorithms that aim to be neutral but promptly start replicating abusive human behaviour because such behaviour is very common online.

The final case is a cautionary tale. In 2013, two organisations working about homelessness launched VI-SPDAT, an algorithmic system designed to help civil servants or community workers in the process of providing housing services to homeless people. The algorithm, which was free to use, was intended as the first step in the complex process of assessing each individual case.

However, in the real world, where civil servants and NGO workers are overwhelmed and work under a lot of stress, VI-SPDAT was mostly used as the only step in deciding whether to offer housing or not to someone. That turned the whole process into an automatised one that provided standard answers to very diverse cases, and which also tended to discriminate against women (and other disadvantaged groups), as other algorithms tend to do. In December 2020, the developers of VI-SPDAT said they regretted its misuse and that they would stop supporting the algorithm. However, all the organisations who had downloaded it and were using it are still free to keep doing so on their own.

Gender discrimination is probably the most prevalent way of discrimination in the algorithmic field, and that’s because gender discrimination is probably the most prevalent way of discrimination in the human world, from which AI and algorithms come. And has happens about gender, algorithmic systems may reproduce and reinforce other unjust and discriminatory biases present in the way human societies behave and in all the data we’ve been generating.

Algorithms should be explainable, so that we all affected by their use can understand how the algorithms work, and so that we can hold those responsible to account. Eticas Foundation’s OASI project is an effort in that direction. On the OASI pages you can read more about algorithms and their social impact, and in the Register you can browse an ever-growing list of algorithms sorted by different kinds of categories. And if you know about an algorithmic system that’s not in the Register and you think it should be added, please let us know.